Collecting statistics from XHTML pages

The relpipe-in-filesystem tool and its xpath streamlet allow us to extract multiple values (attributes) from XML files.

We can use this feature to collect data from e.g. XHTML pages.

    #!/bin/bash

    XMLNS_H="http://www.w3.org/1999/xhtml"

    # If we set xmlns_h="…", we can omit: --option xmlns_h "$XMLNS_H"
    # because XML namespaces can be provided either as an option or as an environment variable.
    # Options have precedence.

    # Step 1: list all files, separated by \0 bytes.
    findFiles() {
        find -print0;
    }

    # Step 2: parse each file as XML and evaluate the XPath expressions.
    fetchAttributes() {
        relpipe-in-filesystem \
            --parallel 8 \
            --file name \
            --streamlet xpath \
                --option xmlns_h "$XMLNS_H" \
                --option attribute '.' --option mode boolean --as 'valid_xml' \
                --option attribute 'namespace-uri()' --as 'root_xmlns' \
                --option attribute '/h:html/h:head/h:title' --as 'title' \
                --option attribute 'count(//h:h1)' --as 'h1_count' \
                --option attribute 'count(//h:h2)' --as 'h2_count' \
                --option attribute 'count(//h:h3)' --as 'h3_count'
    }

    # Step 3: keep only XHTML files and take the five with the most headings.
    filterAndOrder() {
        relpipe-tr-sql \
            --relation "pages" \
                "SELECT
                    name,
                    title,
                    h1_count,
                    h2_count,
                    h3_count
                FROM filesystem WHERE root_xmlns = ?
                ORDER BY h1_count + h2_count + h3_count DESC
                LIMIT 5" \
                --type-cast 'h1_count' integer \
                --type-cast 'h2_count' integer \
                --type-cast 'h3_count' integer \
                --parameter "$XMLNS_H";
    }

    # Step 4: show the result in a GUI window.
    findFiles | fetchAttributes | filterAndOrder | relpipe-out-gui -title "Pages and titles"

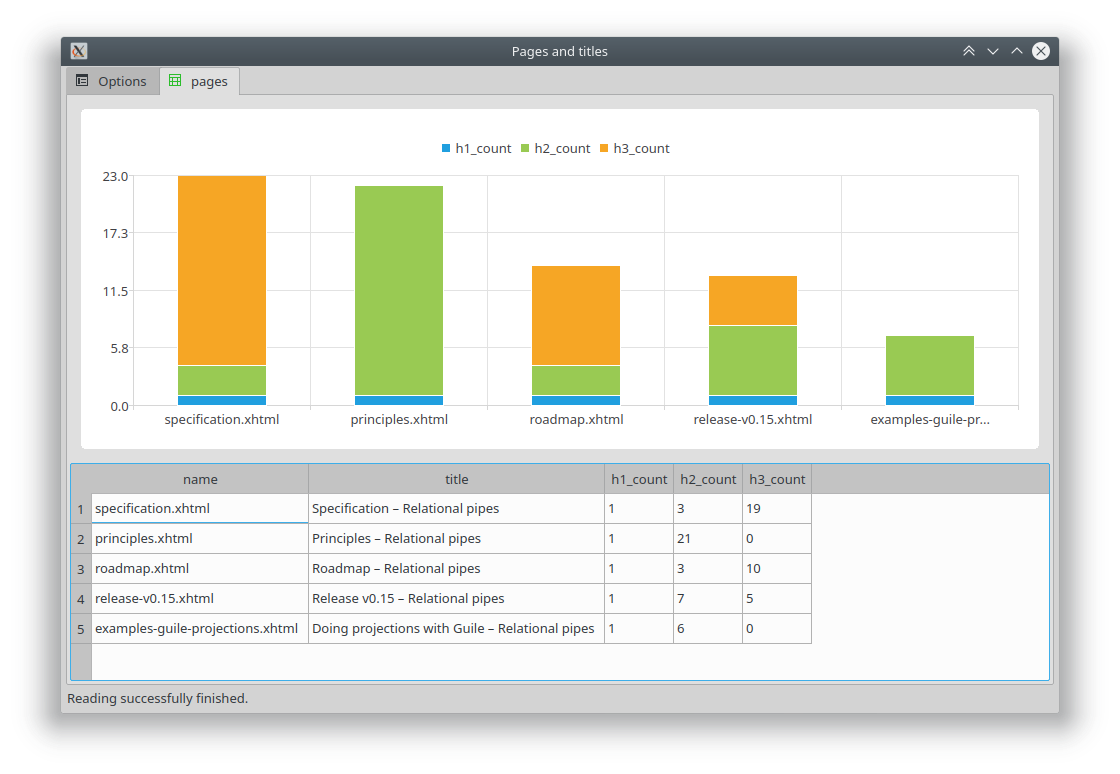

The script above will show this barchart and statistics:

This pipeline consists of four steps:

- findFiles: prepares the list of files, separated by the \0 byte; if we know the extension, we can add -iname '*.xhtml' to make the pipeline more efficient

- fetchAttributes: does the heavy work; it tries to parse each given file as XML and, if the file is valid, extracts the values specified by the XPath expressions; thanks to the --parallel N option, it utilizes N cores of our CPU; we can experiment with the N value and watch how the total time decreases

- filterAndOrder: uses SQL to skip the records (files) that are not XHTML and takes the five valid files with the most headings

- relpipe-out-gui: displays the data in a GUI window and generates a bar chart from the numeric values (we could use e.g. relpipe-out-tabular to display the data in the text terminal, or format the results as XML, CSV or another format)
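The two tuning hints from the list (restricting the search by extension and experimenting with N) could be tried out roughly as follows. This is only a sketch and not part of the original script: the function names findXhtmlFiles, countHeadings and timeParallel are made up for this example, the fetch step is reduced to a single XPath expression, and the timing uses date +%s, so it has only whole-second resolution. The timing loop runs only if relpipe-in-filesystem is installed.

```shell
#!/bin/bash

# Hypothetical variant of findFiles: only regular files named *.xhtml
# (case-insensitive) under the given directory, separated by \0 bytes.
findXhtmlFiles() {
	find "${1:-.}" -type f -iname '*.xhtml' -print0
}

# Reduced fetch step: counts only the h1 headings, using $1 parallel processes.
countHeadings() {
	relpipe-in-filesystem \
		--parallel "$1" \
		--file name \
		--streamlet xpath \
			--option xmlns_h "http://www.w3.org/1999/xhtml" \
			--option attribute 'count(//h:h1)' --as 'h1_count'
}

# Illustrative helper: run the fetch step with several values of N
# and print the elapsed time in whole seconds.
timeParallel() {
	local n start end
	for n in 1 2 4 8; do
		start=$(date +%s)
		findXhtmlFiles "${1:-.}" | countHeadings "$n" > /dev/null
		end=$(date +%s)
		echo "--parallel $n: $((end - start)) s"
	done
}

if command -v relpipe-in-filesystem > /dev/null 2>&1; then
	timeParallel .
else
	echo "relpipe-in-filesystem not found, skipping the timing run" >&2
fi
```

On a small set of files the differences will probably disappear in the one-second resolution; a larger directory tree gives more meaningful numbers.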

We can use a similar pipeline to extract any values from any set of XML files (e.g. Maven POM files or WSDL definitions).
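As a sketch of that idea, the first two steps might be adapted to Maven POM files like this. The function names, the m prefix and the output attribute names are assumptions chosen for illustration; the option layout simply mirrors the XHTML script above, and http://maven.apache.org/POM/4.0.0 is the namespace used by Maven POM files. The pipeline runs only if the Relational pipes tools are installed.

```shell
#!/bin/bash

XMLNS_M="http://maven.apache.org/POM/4.0.0"

# Hypothetical helper: list all pom.xml files under the given directory.
findPomFiles() {
	find "${1:-.}" -type f -name 'pom.xml' -print0
}

# Assumed adaptation of fetchAttributes: the "m" prefix is bound the same way
# as "h" in the XHTML script (option xmlns_<prefix>).
fetchPomAttributes() {
	relpipe-in-filesystem \
		--file name \
		--streamlet xpath \
			--option xmlns_m "$XMLNS_M" \
			--option attribute '/m:project/m:groupId' --as 'group_id' \
			--option attribute '/m:project/m:artifactId' --as 'artifact_id' \
			--option attribute '/m:project/m:version' --as 'version' \
			--option attribute 'count(/m:project/m:dependencies/m:dependency)' --as 'dependency_count'
}

if command -v relpipe-in-filesystem > /dev/null 2>&1 \
	&& command -v relpipe-out-tabular > /dev/null 2>&1; then
	findPomFiles . | fetchPomAttributes | relpipe-out-tabular
else
	echo "Relational pipes tools not found, skipping" >&2
fi
```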

Using --option mode raw-xml we can extract even sub-trees (XML fragments) from the XML files, so we can also collect arbitrarily structured data, not only simple values like strings or booleans.
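A hedged sketch of the raw-xml mode: here the whole head element of each page is extracted as an XML fragment next to the plain title. The placement of --option mode raw-xml between the attribute and its --as name follows the mode boolean line of the script above; the head_xml name and the function names are assumptions made for this example. The namespace is passed through the xmlns_h environment variable, which the comments in the script describe as an alternative to --option xmlns_h.

```shell
#!/bin/bash

# Namespace given as an environment variable instead of --option xmlns_h.
export xmlns_h="http://www.w3.org/1999/xhtml"

# Hypothetical helper: list the *.xhtml files under the given directory.
findPages() {
	find "${1:-.}" -type f -iname '*.xhtml' -print0
}

# Extracts the title as a string and the whole <head> element as raw XML.
fetchHeadFragments() {
	relpipe-in-filesystem \
		--file name \
		--streamlet xpath \
			--option attribute '/h:html/h:head/h:title' --as 'title' \
			--option attribute '/h:html/h:head' --option mode raw-xml --as 'head_xml'
}

if command -v relpipe-in-filesystem > /dev/null 2>&1 \
	&& command -v relpipe-out-tabular > /dev/null 2>&1; then
	findPages . | fetchHeadFragments | relpipe-out-tabular
else
	echo "Relational pipes tools not found, skipping" >&2
fi
```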

Relational pipes, open standard and free software (C) 2018-2025 GlobalCode